Table of Contents

- Understanding Code Coverage Metrics

- Influence of Code Coverage Metrics on Unit Testing

- Basic Coverage Criteria and Its Role in Unit Testing

- Advanced Coverage Criteria: Multiple Condition and Modified Condition/Decision Coverage

- Parameter Value Coverage: A Detailed Analysis

- How Code Coverage Metrics Guide Refactoring Decisions

- Balancing Quality and Quantity: The Role of Code Metrics in Workload Management

- Evolving Requirements: Adapting Test Frameworks Based on Code Metrics

Introduction

Code coverage metrics play a crucial role in software development, aiding developers in assessing the effectiveness of their testing efforts and guiding important decisions such as refactoring and workload management. These metrics provide quantifiable measures of the proportion of code that has been tested, helping developers identify under-tested areas and potential bugs. By understanding code coverage metrics, developers can optimize their testing strategies, improve code quality, and ensure the reliability of their software.

In this article, we will explore various aspects of code coverage metrics and their impact on software development. We will delve into different types of coverage metrics, including statement coverage, branch coverage, condition coverage, and advanced metrics like Multiple Condition Coverage (MCC) and Modified Condition/Decision Coverage (MC/DC). We will also discuss how code coverage metrics guide refactoring decisions, influence unit testing strategies, and aid in workload management. By understanding the importance and implications of code coverage metrics, developers can enhance their testing practices and deliver high-quality, reliable software.

1. Understanding Code Coverage Metrics

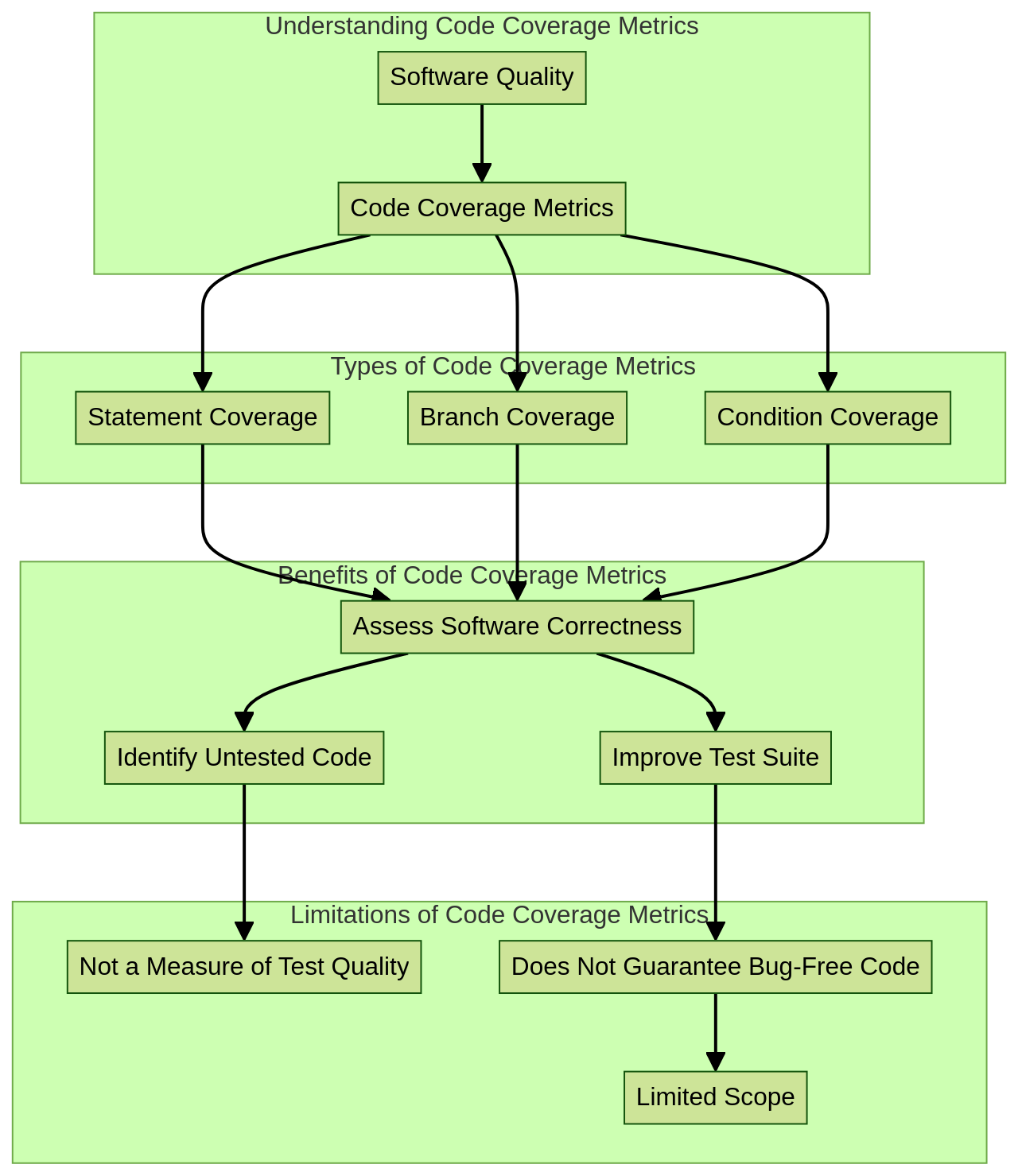

The evaluation of software quality and the effectiveness of unit testing hinge significantly on the utilization of code coverage metrics. These metrics provide a quantifiable measure of the proportion of code that has been tested, thereby offering a tangible understanding of the unit testing process's efficiency. The generation of these metrics is based on the execution of a variety of test cases, and encompasses measurements such as statement coverage, branch coverage, and condition coverage.

Statement coverage is a widely used code coverage metric that calculates the percentage of executable statements run during tests, showing which lines the tests actually reach. Full statement coverage means that each statement in the program has been executed at least once during testing, including the statements inside every 'if' statement, switch statement, and loop, and every function call. This tells testers that no statement was left unexecuted. It does not by itself show that each statement behaves correctly, which depends on the assertions the tests make.

Branch coverage, another form of code coverage, ensures that each potential branch of code is visited during the testing process. It focuses on measuring the percentage of branches in the code that have been executed at least once. There are various techniques and tools available to measure branch coverage, such as code coverage analysis tools. These tools scrutinize the code and track which branches have been executed during testing. By examining the coverage report generated by these tools, developers and testers can identify areas of the code that need additional testing to improve branch coverage.
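To make the difference between statement and branch coverage concrete, here is a minimal sketch. The `apply_discount` function and the hand-recorded outcomes are hypothetical illustrations, not the output of a real coverage tool.

```python
def apply_discount(price: float, is_member: bool) -> float:
    """Hypothetical function with a single decision and no else branch."""
    if is_member:
        price = price * 0.9
    return price


def branch_outcomes(test_inputs):
    """Return the set of outcomes (True/False) the `if` decision took."""
    return {bool(is_member) for _, is_member in test_inputs}


# A single member test executes every statement in apply_discount ...
only_member = [(100.0, True)]
# ... yet the False outcome of the decision is never taken.
print(branch_outcomes(only_member))      # {True}

# Adding a non-member case exercises both outcomes of the decision.
both = [(100.0, True), (100.0, False)]
print(sorted(branch_outcomes(both)))     # [False, True]
```

With only the member test, statement coverage is already 100% while branch coverage is 50%, which is why the two metrics are usually reported side by side.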

Condition coverage, by contrast, requires tests to evaluate each condition within a decision statement as both true and false at least once. It does not require every combination of conditions to be tested (that is the stricter multiple condition coverage), and it does not by itself guarantee that both outcomes of the overall decision are exercised, so it is usually paired with branch coverage.
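A small sketch can show what condition coverage does and does not demand. It assumes a hypothetical decision `a and b` and treats the two conditions as plain boolean inputs, ignoring short-circuit evaluation for simplicity.

```python
def conditions_covered(tests):
    """True if each condition (a, b) took both True and False across tests."""
    a_values = {a for a, _ in tests}
    b_values = {b for _, b in tests}
    return a_values == {True, False} and b_values == {True, False}


def decision_outcomes(tests):
    """Outcomes of the hypothetical decision `a and b` across the tests."""
    return {a and b for a, b in tests}


tests = [(True, False), (False, True)]
print(conditions_covered(tests))   # True
print(decision_outcomes(tests))    # {False}
```

Two tests give each condition both values, yet the decision never evaluates to true, so the body guarded by it would stay untested.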

Modified Condition/Decision Coverage (MC/DC), a more advanced coverage type, combines branch and condition coverage and additionally requires showing that each condition independently affects the outcome of its decision. This form of coverage is typically used in safety-critical projects and is required or recommended by safety standards such as DO-178C (avionics) and ISO 26262 (automotive). A tool known as Symflower generates tests for different coverage types, including MC/DC, and can detect potential bugs such as null pointer exceptions and arithmetic exceptions. However, achieving high code coverage does not guarantee a flawless program.

[Code coverage](https://en.wikipedia.org/wiki/Code_coverage) serves as a crucial aspect in assessing software quality, but it should be augmented with other testing techniques. It aids in identifying untested or unused code, gauging the quality of test suites, identifying potential bugs, and recognizing dead code. Conversely, test coverage ensures that all critical business requirements are tested and that there are no major gaps in testing. Test coverage can be measured qualitatively, assessing how well tests cover business requirements, specifications, product features, risks, and functional requirements.

Both code coverage and test coverage have limitations, such as not guaranteeing code quality or bug-free software, and requiring different tools for different programming languages. They are critical metrics in determining software correctness, and their use depends on the project's requirements. [Code coverage](https://en.wikipedia.org/wiki/Code_coverage) is preferred during development to ensure all lines of code are tested, while test coverage is more suitable for assessing the effectiveness of tests and overall code quality.

Understanding these metrics is vital for developers since they can pinpoint areas in the code that may not have been adequately tested, thereby uncovering potential risks and vulnerabilities.

Furthermore, integrating code coverage analysis into the development process can be done using various tools and techniques. Tools like JaCoCo provide detailed reports on the code coverage achieved during testing, including line, branch, and method coverage. These tools can be integrated into build systems like Maven or Gradle, allowing developers to generate code coverage reports as part of the build process.

The choice between code coverage and test coverage depends on the specific situation, but both are crucial elements in a comprehensive testing strategy. By measuring the proportion of code that is executed during testing, developers can identify areas of the code that remain untested and potential bugs that may arise. This helps in ensuring comprehensive test coverage and improving the overall quality and reliability of the software. Additionally, code coverage metrics can also aid in identifying redundant or unused code, leading to better code optimization and maintenance.

2. Influence of Code Coverage Metrics on Unit Testing

[Code coverage](https://en.wikipedia.org/wiki/Code_coverage) metrics are instrumental in shaping unit testing strategies. They furnish developers with the ability to discern which portions of the code have been rigorously scrutinized and which sections necessitate further exploration. This comprehension enables developers to fine-tune their testing efforts, directing their attention towards sections of the code that are untested or inadequately tested. Such a strategic approach ensures judicious resource utilization and aids in maintaining superior code quality.

In unit testing, code coverage is measured with a coverage tool that monitors your code while the tests run and reports how much of it was executed. These tools generate reports and metrics that display the percentage of code covered, enabling you to identify areas the tests do not reach.

Moreover, code coverage metrics can also guide the selection of testing tools and frameworks, as different tools offer diverse levels of code coverage analysis. For instance, tools such as JaCoCo, Cobertura, Istanbul, Codecov, and Coverlet are popular for their ability to provide insights into which parts of the code are being tested and which parts are not. They can generate reports and visualizations to help identify areas that need more testing.

However, achieving 100% code coverage is not always practical or beneficial. A singular focus on high code coverage figures can lead to a misleading sense of security. Therefore, it is crucial to invest in high-quality tests, rather than merely aiming for high code coverage. Engineering time is a valuable resource and should be directed towards the most critical and relevant tasks. Remember that not all code is created equal, and certain modules may require more exhaustive testing than others.

While Google's best practices suggest 60% as "acceptable," 75% as "commendable," and 90% as "exemplary" code coverage, most repositories using Codecov find that their coverage values tend to slide back once they exceed 80%. It is therefore advisable to aim for a code coverage value between 75% and 85%. Tools like Codecov offer features such as automatic coverage gates, patch checks, and impact analysis, which can help raise and maintain code coverage. Prioritizing high-quality tests fosters a robust testing culture and reduces the number of bugs that reach production.

Instead of fixating on 100% code coverage, the emphasis should be on a coverage value that leads to a better-tested codebase, such as 80%. [Code coverage](https://en.wikipedia.org/wiki/Code_coverage) reporters list the lines of code that were not run and report the percentage of lines executed out of the total number of lines in the codebase. For instance, a test suite that touches 6 out of 10 lines of code in a codebase yields a code coverage of 60%. Relying solely on this percentage can create a false sense of security.
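The arithmetic behind the 6-out-of-10 example is simple enough to sketch. The function names and the 80% default target below are illustrative assumptions taken from the figures in this article, not part of any particular tool.

```python
def coverage_percent(covered_lines: int, total_lines: int) -> float:
    """Percentage of lines executed out of all executable lines."""
    if total_lines <= 0:
        raise ValueError("total_lines must be positive")
    return 100.0 * covered_lines / total_lines


def meets_target(percent: float, target: float = 80.0) -> bool:
    """Check a coverage figure against a (hypothetical) team target."""
    return percent >= target


percent = coverage_percent(6, 10)
print(percent)                 # 60.0
print(meets_target(percent))   # False
```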

High code coverage without good tests can be detrimental, because the number suggests a level of safety that the tests do not deliver. Since engineering time is costly and finite and not all code is equal, crucial modules should come first when writing high-quality tests.

Tools like Codecov can automatically set up coverage gates on the entire codebase. The Codecov patch check measures the coverage of the lines modified in a given code change. Features like the file viewer in the Codecov dashboard can identify files and directories with low test coverage, and impact analysis can pinpoint critical code files that are frequently run in production. Setting a goal of 100% code coverage can drain an engineering organization, so the focus should be on writing high-quality tests that cover the most critical parts of the codebase. Writing good tests leads to a strong testing culture and fewer bugs in production.
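The idea behind a patch check can be sketched in a few lines. This is not Codecov's implementation: the function, its inputs, and the choice to treat an empty change as fully covered are assumptions made for illustration.

```python
def patch_coverage(changed_lines: set, executed_lines: set) -> float:
    """Percentage of changed lines that the test run executed."""
    if not changed_lines:
        return 100.0  # assumption: an empty change counts as fully covered
    covered = changed_lines & executed_lines
    return 100.0 * len(covered) / len(changed_lines)


changed = {10, 11, 12, 13}           # hypothetical lines touched by a change
executed = {1, 2, 3, 10, 11, 12}     # hypothetical lines hit by the tests
print(patch_coverage(changed, executed))  # 75.0
```

Measuring only the changed lines keeps the focus on new work, so a legacy file with low overall coverage does not block a well-tested change.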

3. Basic Coverage Criteria and Its Role in Unit Testing

Unit testing is a critical part of software development, and two of the most important metrics used to assess its effectiveness are statement coverage and branch coverage.

Statement coverage is a quantitative measure of how many executable statements are tested, serving as a primary indicator of how much of the codebase has been examined. To achieve high statement coverage, design test cases that reach every statement, including those on error-handling and edge-case paths. [Code coverage](https://en.wikipedia.org/wiki/Code_coverage) tools can be invaluable in identifying untested areas of the code, allowing for test case refinement. Prioritizing test cases based on their potential impact and their likelihood of uncovering bugs also plays a significant role in achieving high statement coverage.

Branch coverage, on the other hand, is a more nuanced metric that measures whether every branch in the codebase has been exercised by the tests. Branches in code refer to decision points like 'if' and 'switch' statements. Achieving high branch coverage is crucial as it helps to identify potential gaps or bugs in the code that may not be caught by other forms of testing. Tools like JaCoCo, Cobertura, and Emma can be used to measure branch coverage, helping developers identify areas of the code that have not been adequately tested and improve the quality of their unit tests.

The relationship between branch coverage and cyclomatic complexity, which counts the linearly independent paths through a block of code, can be particularly insightful. The cyclomatic complexity of a piece of code is an upper bound on the number of test cases needed to achieve full branch coverage of it, which makes it a useful estimate when planning tests.
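As a rough illustration of that relationship, cyclomatic complexity can be approximated as the number of decision points plus one. The sketch below uses Python's standard `ast` module. Which node types count as decision points is an assumption here, and real tools may count differently.

```python
import ast

# Node types treated as decision points in this approximation.
DECISION_NODES = (ast.If, ast.For, ast.While, ast.IfExp, ast.ExceptHandler)


def cyclomatic_complexity(source: str) -> int:
    """Approximate cyclomatic complexity as (decision points + 1)."""
    complexity = 1
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, DECISION_NODES):
            complexity += 1
        elif isinstance(node, ast.BoolOp):
            # `a and b and c` adds two extra short-circuit branches.
            complexity += len(node.values) - 1
    return complexity


SAMPLE = '''
def classify(n):
    if n < 0:
        return "negative"
    elif n == 0:
        return "zero"
    return "positive"
'''

print(cyclomatic_complexity(SAMPLE))  # 3
```

A result of 3 matches the three test inputs (a negative number, zero, and a positive number) that together take every branch of the hypothetical `classify` function.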

However, it's crucial to remember that high statement and branch coverage are not guarantees of test quality or expected code behavior. They merely provide a baseline measure of how thoroughly the code has been tested. Therefore, they should be evaluated alongside other valuable metrics to gain a comprehensive view of code quality. Platforms like LinearB offer metrics tracking and observability for engineering processes, providing a more holistic view of code quality. These platforms can help developers ensure that the majority of the code is tested, thereby reducing the likelihood of undetected bugs or issues.

While striving for high statement and branch coverage should be seen as a starting point, achieving these does not mark the end of the quest for quality code and effective unit testing. It's also essential to interpret the results of these coverage metrics correctly. By understanding the coverage achieved and identifying areas that have not been adequately tested, developers can gain insights into the effectiveness of their test cases and identify potential gaps or areas for improvement in their testing strategy.

Thus, achieving high statement and branch coverage in unit testing is a continuous process, requiring careful planning, comprehensive test design, and effective use of tools and metrics. It's a crucial part of ensuring high-quality, reliable code that can stand up to real-world use.

4. Advanced Coverage Criteria: Multiple Condition and Modified Condition/Decision Coverage

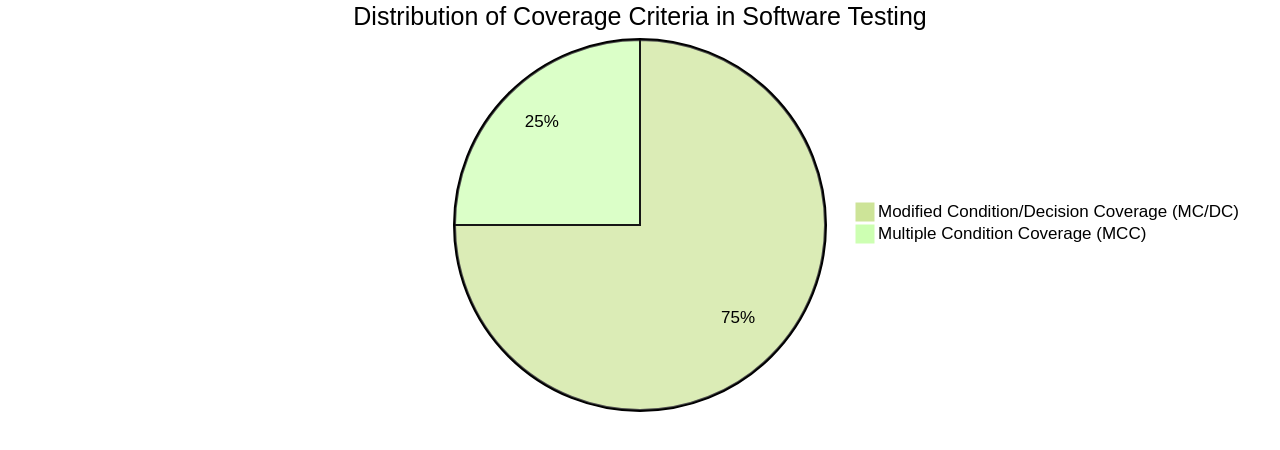

Advanced code coverage metrics, such as Multiple Condition Coverage (MCC) and Modified Condition/Decision Coverage (MC/DC), provide a deeper understanding of the intricacies of the code during testing.

MCC examines every possible combination of condition outcomes within a decision, ensuring a comprehensive evaluation of the code. On the other hand, MC/DC verifies the impact of each condition within a decision, offering a high degree of assurance in code, especially in complex or critical systems.

To implement MCC in software testing, it is essential to create test cases covering all possible combinations of conditions within a decision point. This method increases the chances of identifying defects in the software. MCC has been effectively applied in real-world projects for testing different combinations of input parameters, logical conditions, and boundary values. However, achieving high coverage with MCC requires adherence to best practices for unit testing, such as identifying all possible conditions, writing test cases for each condition, using code coverage tools, prioritizing test cases, and regularly reviewing and updating test cases.
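A minimal sketch of what "all possible combinations" means in practice: for a decision with n boolean conditions, MCC calls for 2^n test vectors. The helper name below is hypothetical.

```python
from itertools import product


def mcc_test_vectors(num_conditions: int):
    """Every True/False combination for the given number of conditions."""
    return list(product([False, True], repeat=num_conditions))


vectors = mcc_test_vectors(3)
print(len(vectors))   # 8
print(vectors[0])     # (False, False, False)
print(vectors[-1])    # (True, True, True)

# The number of required vectors doubles with every added condition.
print([len(mcc_test_vectors(n)) for n in (2, 3, 4, 5)])  # [4, 8, 16, 32]
```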

Tools like Cobertura, JaCoCo, and Clover report statement and branch coverage, which is a useful starting point, but measuring MCC itself generally requires a tool with explicit condition-level support. While MCC increases test thoroughness and improves defect detection, the number of combinations doubles with each additional condition, so the approach scales poorly and adds many tests with diminishing returns. The decision to use MCC should weigh these trade-offs.

MC/DC, on the other hand, requires a test suite to show that each condition in a decision can independently change that decision's outcome. It is applied in critical systems, such as those in aviation and the medical field, to ensure that software responds correctly to various inputs and conditions. Achieving high MC/DC coverage typically involves test coverage tools, systematic test case design techniques, test oracles, and branch testing, with mutation testing as a complementary check on test quality.
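The notion of a condition independently affecting a decision can be sketched as a search for "independence pairs": two test vectors that differ in exactly one condition and produce different decision outcomes. The decision `a and (b or c)` and the helper below are hypothetical, and the sketch follows the strict unique-cause reading of MC/DC.

```python
from itertools import product


def decision(a: bool, b: bool, c: bool) -> bool:
    """Hypothetical decision under test."""
    return a and (b or c)


def independence_pairs(decision_fn, num_conditions: int):
    """Map each condition index to the vector pairs that differ only in
    that condition and flip the decision outcome."""
    pairs = {i: [] for i in range(num_conditions)}
    for vector in product([False, True], repeat=num_conditions):
        for i in range(num_conditions):
            if vector[i]:
                continue  # start from the False side so each pair appears once
            flipped = vector[:i] + (True,) + vector[i + 1:]
            if decision_fn(*vector) != decision_fn(*flipped):
                pairs[i].append((vector, flipped))
    return pairs


for index, found in independence_pairs(decision, 3).items():
    print(index, found)
```

Picking one pair per condition and merging shared vectors gives four tests for this three-condition decision, for example (True, False, False), (True, True, False), (True, False, True), and (False, True, False), compared with the eight that MCC would need.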

These advanced metrics help make code more robust and expose defects in complex decision logic. Their application should align with the organization's objectives and with the programming languages and libraries in use. Industry regulations may also require a specific level of code coverage in safety-critical systems. The size and experience of the development team play a critical role in implementing these advanced metrics effectively.

Remember, while MCC and MC/DC can significantly enhance the testing process, they are tools that assist in determining what still needs to be tested and reviewing the quality of existing tests. They should not be viewed as a measure of the codebase's quality. Instead, their value lies in their ability to provide insights that can guide the development of high-quality, reliable software.

5. Parameter Value Coverage: A Detailed Analysis

Parameter Value Coverage (PVC) is a vital metric in software testing that quantifies the extent to which the potential input values of a function undergo testing. Various inputs can trigger different execution paths within a function, making PVC an essential tool in software robustness and reliability.

For instance, the software vulnerability in Atlassian Confluence, CVE-2023-22515, illustrates the importance of PVC. This vulnerability, due to a filtering error in the SafeParametersInterceptor class, allowed an unauthenticated remote attacker to bypass XWork functionality and create new administrative user accounts. Testing a wider range of parameter values might have helped surface this flaw earlier.

Parameter sensitive plan (PSP) optimization in SQL Server applies a related idea. PSP optimization can address performance issues caused by 'if' branches in specific circumstances: parameter values that fall into different buckets can result in different plans, each with different compile and runtime values.

To ensure comprehensive PVC in software testing, it's crucial to consider the different potential values that each parameter can take. Systematically choosing values for each parameter ensures a wide range of scenarios are covered during testing, which aids in identifying potential issues or bugs in the software.

Approaches such as boundary value analysis and equivalence partitioning can improve PVC. These techniques help identify the boundaries of input parameter values and divide them into equivalent groups for testing. Combinatorial testing techniques, like pairwise or orthogonal array testing, can reduce the number of test cases while still achieving high coverage. Random testing, where inputs are generated randomly, can cover a wide range of parameter values. Automation tools and frameworks streamline the process of generating and executing test cases with different parameter values.
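Boundary value analysis and equivalence partitioning can be sketched for a single integer parameter. The valid range of 18 to 65 and the helper names are hypothetical, and the sketch assumes the range is inclusive.

```python
from typing import Dict, List


def boundary_values(low: int, high: int) -> List[int]:
    """Values at and just around the edges of the valid range [low, high]."""
    return sorted({low - 1, low, low + 1, high - 1, high, high + 1})


def partition_representatives(low: int, high: int) -> Dict[str, int]:
    """One representative value from each equivalence class."""
    return {
        "below_range": low - 1,
        "in_range": (low + high) // 2,
        "above_range": high + 1,
    }


print(boundary_values(18, 65))
# [17, 18, 19, 64, 65, 66]
print(partition_representatives(18, 65))
# {'below_range': 17, 'in_range': 41, 'above_range': 66}
```

Feeding these values into a parameterized test covers the edges and one value per class without enumerating the whole input domain.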

Measuring PVC in software testing is achievable with various tools that analyze the range of input values for parameters in a software system and provide metrics on coverage achieved during testing. Tools like mutation testing tools, code coverage tools, and model-based testing tools can offer valuable insights into the thoroughness of software testing efforts and help improve the overall quality of the tested software.

To achieve high PVC, it's important to follow best practices for unit testing. Techniques such as boundary value analysis, equivalence partitioning, and combinatorial testing ensure a wide range of parameter values are tested, covering different scenarios effectively. Proper assertions and test data generation strategies increase the coverage of parameter values in tests.

Low PVC can negatively impact software quality. When PVC is low, important classes of parameter values have gone untested, potentially leaving bugs or vulnerabilities in the software undiscovered. High PVC during testing improves overall software quality and reliability.

In test planning, prioritizing PVC involves considering various factors like the frequency of parameter usage, the impact of parameter values on the system, and the likelihood of parameter failures. Techniques like equivalence partitioning and boundary value analysis identify representative values for each parameter, ensuring comprehensive coverage.

Automated techniques for achieving high PVC can be implemented using strategies such as boundary value analysis, equivalence partitioning, and combinatorial testing. These techniques systematically select test cases that cover a wide range of parameter values, ensuring different combinations and values are thoroughly tested. Leveraging these automated techniques improves the efficiency of unit testing efforts, leading to more robust and reliable software.

6. How Code Coverage Metrics Guide Refactoring Decisions

[Code coverage](https://en.wikipedia.org/wiki/Code_coverage) metrics are an integral part of a software engineer's toolkit, particularly when making decisions about refactoring. They help identify under-tested sections of the codebase that could benefit from refactoring, which may involve simplifying complex code structures, breaking down large functions into smaller, more manageable pieces, or enhancing code modularity.

Refactoring is a crucial aspect of software development, serving as a tool for managing technical debt, enhancing code readability, and making the codebase more maintainable. However, determining when to refactor can be tricky. It's a subjective decision that demands careful consideration and should be based on whether it will deliver value in a reasonable timeframe.

Approaches to refactoring include adhering to the Test-Driven Development (TDD) cycle, which involves writing a failing test (red), making it pass (green), and then refactoring the code. It's also recommended to incorporate refactoring as part of feature implementation or bug fixing, rather than as a separate task. This way, refactoring can be done continuously but only for what needs to be altered.

[Code coverage](https://en.wikipedia.org/wiki/Code_coverage) metrics play a pivotal role in this decision-making process, providing quantitative data that can guide refactoring decisions.

However, it's crucial to remember that high code coverage doesn't necessarily equate to bug-free code. The quality of the tests is also important. For instance, Google recommends 60% coverage as "acceptable," 75% as "commendable," and 90% as "exemplary." Most repositories using Codecov find that their code coverage values decline when they exceed 80% coverage.

Refactoring driven by code coverage metrics can result in more maintainable and testable code, ultimately enhancing the overall software quality. However, it's essential to adopt a pragmatic approach to refactoring, focusing on what needs to be modified, as suggested by Nicolas Carlo. Refactoring shouldn't be viewed as a constant time sink but as a means to facilitate faster and safer changes.

To utilize code coverage metrics for refactoring, a comprehensive set of unit tests for your codebase is essential. These tests should cover as much of your code as possible to provide an accurate measure of code coverage.

Once you have your unit tests in place, a code coverage tool can measure the percentage of your code covered by these tests. This information can help you prioritize your refactoring efforts, focusing on areas of your code with low code coverage, likely to have a higher risk of bugs and issues. Improving the test coverage in these areas can make your code more robust and easier to maintain.
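One way to turn a coverage report into a refactoring shortlist is to rank modules by coverage and keep those below a threshold. The module names, percentages, and the 80% default below are hypothetical values chosen for illustration.

```python
def rank_by_coverage(coverage_by_module: dict, threshold: float = 80.0):
    """Modules below the threshold, lowest coverage first."""
    low = [
        (name, pct)
        for name, pct in coverage_by_module.items()
        if pct < threshold
    ]
    return sorted(low, key=lambda item: item[1])


report = {
    "billing.py": 42.0,
    "utils.py": 91.5,
    "auth.py": 67.3,
    "export.py": 80.0,
}
print(rank_by_coverage(report))
# [('billing.py', 42.0), ('auth.py', 67.3)]
```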

In addition to identifying areas for improvement, code coverage metrics can also help you assess the impact of your refactoring efforts. After making changes to your code, you can re-run your tests and measure the change in code coverage. Because refactoring should preserve behavior, the tests should still pass. If coverage also holds steady or rises, that suggests the restructured code remains well exercised, although coverage alone does not prove that quality improved.

Overall, code coverage metrics are a valuable tool for guiding your refactoring efforts. By using these metrics to identify areas for improvement and assess the impact of your changes, you can make your codebase more robust and maintainable.

7. Balancing Quality and Quantity: The Role of Code Metrics in Workload Management

[Code coverage](https://en.wikipedia.org/wiki/Code_coverage) metrics are pivotal in software testing, providing insights into the portion of code executed during the testing process. However, striving for 100% code coverage can be impractical and resource-intensive. Instead, code coverage should be seen as a means to enhance software quality, assisting in identifying untested code where bugs may hide.

This view aligns with Google's perspective, which sets 60% code coverage as "acceptable," 75% as "commendable," and 90% as "exemplary." These benchmarks guide developers towards realistic and effective coverage goals. It's noteworthy that repositories using Codecov often see a decline in coverage percentage when they exceed 80% coverage.

While code coverage is essential, it shouldn't overshadow test quality. High coverage percentages may create a false sense of security if the tests don't thoroughly verify the results' correctness. A test suite with 100% coverage might merely provide surface-level tests, missing significant edge cases. Therefore, focusing on critical and complex parts of the code should be a priority to ensure thorough testing. Regular review and refactoring of tests to ensure they're concise, maintainable, and provide meaningful assertions can improve overall test quality.

Acknowledging the finite and costly nature of engineering time, it's crucial to allocate it wisely. Not all code is equal—certain modules, especially those dealing with sensitive areas like billing or personal data, require more rigorous testing. Impact analysis tools, which combine runtime data with coverage information, can help identify these critical code files and direct testing efforts accordingly.

When setting code coverage goals, numerous factors must be considered. These include the programming languages and libraries used, the size and experience of the development team, and industry-specific regulatory requirements. For example, the aviation standard DO-178B requires structural coverage that scales with software criticality, up to MC/DC for the most critical level, and the functional safety standard IEC 61508 recommends structural coverage targets based on the safety integrity level.

A more nuanced approach to code coverage is thus recommended. Instead of aiming for 100% coverage, strive for a value around 80%, which tends to lead to a better-tested codebase and fewer bugs in production. Coupled with high-quality tests that examine the most crucial parts of the codebase, this approach fosters a robust testing culture and ultimately yields a more reliable software product.

In addition to code coverage, other code metrics such as cyclomatic complexity, code duplication, and code churn can provide valuable insights for effective testing. Regular monitoring and analyzing of these code metrics can continuously improve the testing process and ensure a high-quality, maintainable, and testable codebase.

8. Evolving Requirements: Adapting Test Frameworks Based on Code Metrics

As the software landscape perpetually transforms, test frameworks must keep pace with these shifts. Code metrics serve as a compass in this adaptation process, offering invaluable insights into code quality and maintainability. For example, if code metrics reveal high complexity in certain code segments, it may be beneficial to bolster testing methodologies or tools for these areas.

Simultaneously, if metrics indicate regular modifications in specific parts of the code, it might be wise to deploy automated tests to ensure their robustness throughout the evolution process. Automated tests can notably enhance the efficiency of the test suite, as exemplified by Riedel Communications Austria, where three specific tests out of an automated suite of 1500 tests identified ten bugs.

Static test case prioritization, as demonstrated by elevating the priority of new tests and those that have recently failed, can be a potent strategy. Linking system tests to the software's code through bug tickets can further enhance this process, allowing for prioritization based on recently modified code.
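A minimal sketch of static prioritization, assuming each test carries two hypothetical flags (`is_new` and `recently_failed`) and that either flag is enough to move a test to the front of the queue:

```python
from dataclasses import dataclass


@dataclass
class SuiteEntry:
    """Hypothetical record describing one test in the suite."""
    name: str
    is_new: bool
    recently_failed: bool


def prioritize(entries):
    """New or recently failed tests first; the stable sort keeps the
    original order within each group."""
    return sorted(entries, key=lambda e: not (e.is_new or e.recently_failed))


suite = [
    SuiteEntry("t_old_pass", False, False),
    SuiteEntry("t_new", True, False),
    SuiteEntry("t_old_failed", False, True),
    SuiteEntry("t_old_pass_2", False, False),
]
print([entry.name for entry in prioritize(suite)])
# ['t_new', 't_old_failed', 't_old_pass', 't_old_pass_2']
```

Because the ordering depends only on information known before the run starts, it can be computed once per test cycle.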

However, it's vital to remember that comprehensive system tests on embedded software can be both time-consuming and expensive. Therefore, tests with a higher likelihood of uncovering bugs should be prioritized. In scenarios where coverage information is unavailable or unattainable, approximation methods can serve as viable alternatives. Metrics related to testing and debugging, such as bug lifecycle and test records, can be systematically collected and stored in a database to assist with this analysis.

Dynamic test case prioritization can be achieved by reordering pending tests based on the most recent test verdict. This reordering can significantly improve the efficiency of the test suite. While rule mining and artificial intelligence can be employed for dynamic test case prioritization, simpler approaches can also yield effective results.

By consistently monitoring and responding to code metrics, developers can ensure their test frameworks remain effective and relevant, even amid changing software requirements. This continual adaptation process is key to maintaining the robustness and reliability of software in the long run.

Code metrics like cyclomatic complexity, code coverage, and code duplication can be analyzed to make informed decisions on test framework improvements. Areas with high complexity or low code coverage may require additional tests or refactoring. Code duplication can be identified using code metrics, allowing developers to consolidate similar test cases and improve the efficiency of their test frameworks.

Code metrics also aid in prioritizing test cases by identifying the most critical and high-risk areas of the code. Focusing testing efforts on these areas ensures thorough testing of the most important functionality.

Tools like SonarQube, JaCoCo, Checkstyle, and PMD can help evaluate code quality and performance, offering insights into the codebase, identifying potential issues, and suggesting improvements. They offer features such as code coverage analysis, code complexity measurement, coding rule enforcement, and code duplication detection.

To identify areas of high complexity using code metrics, developers can use cyclomatic complexity, which measures the number of linearly independent paths through a program's source code. A higher cyclomatic complexity indicates a higher level of complexity. Other metrics such as the number of dependencies, coupling between modules, and code duplication can also be indicators of high complexity.

Investing in automated tests for evolving code can provide several benefits. By having automated tests in place, developers can quickly detect and fix any regressions or issues that may arise as the code evolves. This can help in ensuring the stability and reliability of the software. Automated tests also provide a safety net for making changes to the codebase, allowing developers to refactor or add new features with confidence that existing functionality will not be broken.

To monitor and respond to code metrics in test frameworks, developers can utilize tools like code coverage tools and static code analysis tools. These tools provide metrics such as cyclomatic complexity, code duplication, and maintainability index. Regularly reviewing and analyzing code metrics can help developers identify areas for improvement, track the progress of their testing efforts, and ensure the overall quality of their codebase.

By improving test effectiveness with code metrics analysis, developers can optimize their test cases. This analysis can help in identifying redundant or ineffective test cases, as well as areas of the code that are not adequately covered by tests. By addressing these issues, developers can enhance the overall effectiveness of the test suite and ensure better test coverage.

Conclusion

One of the main takeaways from this article is that code coverage metrics serve as a valuable tool in software development. They guide developers in making informed decisions about refactoring by identifying under-tested sections of code that may benefit from restructuring or simplification. Additionally, code coverage metrics help developers balance workload management by prioritizing testing efforts based on areas of the code that have low coverage or higher complexity. However, it's important to note that achieving high code coverage does not guarantee bug-free software, and other testing techniques should be used in conjunction with code coverage metrics for comprehensive testing.

To enhance their testing practices and deliver high-quality, reliable software, developers are encouraged to integrate code coverage analysis into their development process using tools like JaCoCo or Cobertura. By regularly measuring and analyzing code coverage metrics, developers can identify areas for improvement, prioritize testing efforts, and make informed decisions about refactoring.
